Apple-Nvidia collaboration triples speed of AI model production

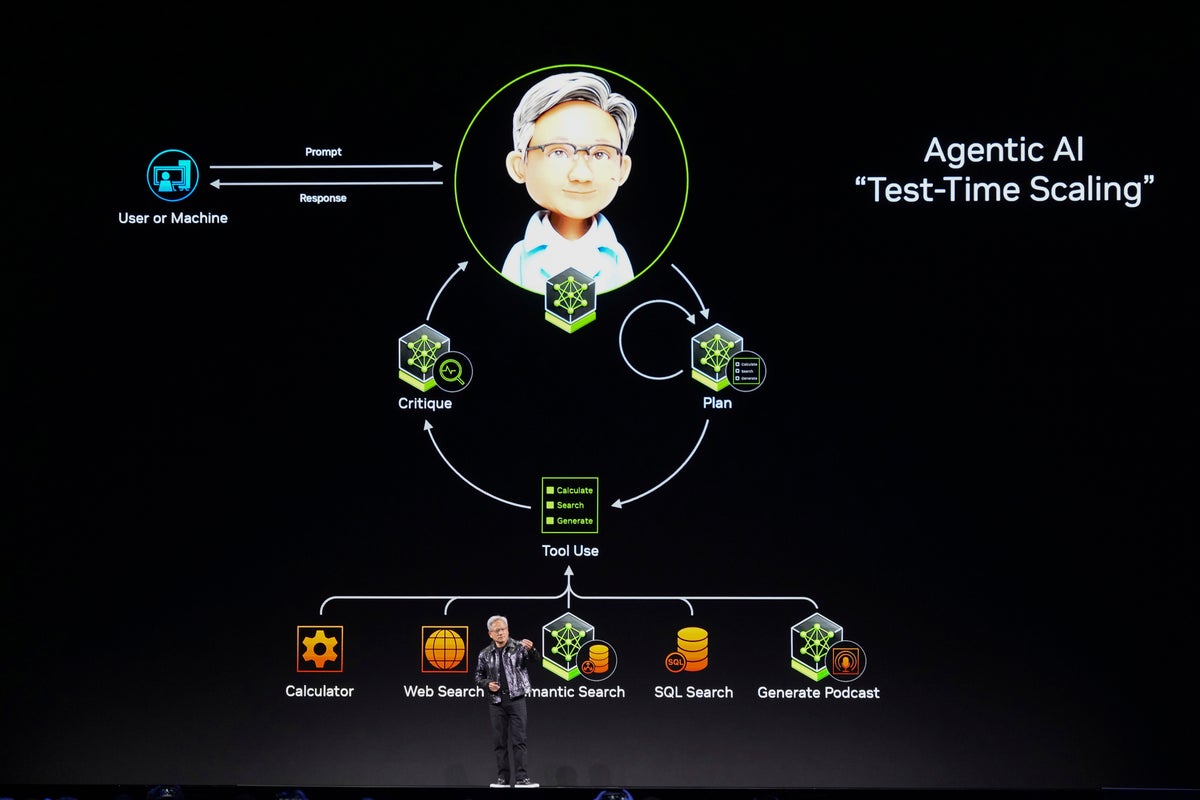

Training models for machine learning is a processor-intensive task. Apple's latest machine learning research could speed up the creation of models for Apple Intelligence, with a technique that almost triples the rate of token generation on Nvidia GPUs.

One of the problems in creating large language models (LLMs) for tools and apps that offer AI-based functionality, such as Apple Intelligence, is the inefficiency of producing the LLMs in the first place. Training models for machine learning is a slow, resource-intensive process, which is often countered by buying more hardware and taking on increased energy costs.

Earlier in 2024, Apple published and open-sourced Recurrent Drafter, known as ReDrafter, a method of speculative decoding that speeds up token generation. It uses an RNN (Recurrent Neural Network) draft model, combining beam search with dynamic tree attention to predict and verify draft tokens from multiple paths.
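To give a rough sense of how speculative decoding works in general, here is a minimal Python sketch. It is not Apple's ReDrafter code: it uses plain greedy verification rather than an RNN drafter with beam search and dynamic tree attention, and `target_model`, `draft_model`, and `speculative_generate` are hypothetical toy stand-ins invented for illustration. A cheap draft model guesses several tokens ahead, and the expensive target model checks them in one verification step, keeping the guesses that match.

```python
from typing import Callable, List, Tuple

Token = int
Model = Callable[[List[Token]], Token]


def target_model(context: List[Token]) -> Token:
    """Hypothetical 'large' model: next token is the context sum modulo 7."""
    return sum(context) % 7


def draft_model(context: List[Token]) -> Token:
    """Hypothetical 'small' drafter: matches the target model except
    when the context length is a multiple of 4 (an assumed error pattern)."""
    guess = sum(context) % 7
    return (guess + 1) % 7 if len(context) % 4 == 0 else guess


def autoregressive_generate(prompt: List[Token], num_tokens: int,
                            target: Model) -> List[Token]:
    """Baseline: one target-model step per generated token."""
    tokens = list(prompt)
    for _ in range(num_tokens):
        tokens.append(target(tokens))
    return tokens[len(prompt):]


def speculative_generate(prompt: List[Token], num_tokens: int, draft_len: int,
                         target: Model, draft: Model) -> Tuple[List[Token], int]:
    """Greedy speculative decoding. Returns the tokens and the number of
    verification steps taken by the target model."""
    tokens = list(prompt)
    target_steps = 0
    while len(tokens) - len(prompt) < num_tokens:
        # 1. The draft model proposes draft_len tokens, one after another.
        proposal: List[Token] = []
        for _ in range(draft_len):
            proposal.append(draft(tokens + proposal))

        # 2. The target model verifies the proposal. A real system scores all
        #    positions in one batched forward pass, so count it as one step.
        target_steps += 1
        accepted: List[Token] = []
        for guess in proposal:
            expected = target(tokens + accepted)
            if guess == expected:
                accepted.append(guess)      # draft token confirmed
            else:
                accepted.append(expected)   # replace the first wrong guess
                break
        else:
            # Every draft token matched, so the target adds one more token.
            accepted.append(target(tokens + accepted))
        tokens.extend(accepted)

    return tokens[len(prompt):len(prompt) + num_tokens], target_steps


if __name__ == "__main__":
    prompt = [1, 2, 3]
    baseline = autoregressive_generate(prompt, 20, target_model)
    spec, steps = speculative_generate(prompt, 20, 4, target_model, draft_model)
    print("same output:", spec == baseline)
    print("target steps:", steps, "vs", len(baseline), "for the baseline")
```

The output is identical to ordinary token-by-token generation, because every accepted token is one the target model would have produced anyway. The gain comes from needing fewer target-model steps whenever the drafter guesses correctly.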

This sped up LLM token generation by up to 3.5 times per generation step versus typical auto-regressive token generation techniques. In a post to its Machine Learning Research site, Apple explained that it didn't stop at its existing work on Apple Silicon. The new report, published on Wednesday, detailed how the team applied the ReDrafter research to make it production-ready for use with Nvidia GPUs.

Nvidia GPUs are often employed in servers used for LLM generation, but the high-performance hardware comes at a hefty cost. It's not uncommon for multi-GPU servers to cost in excess of $250,000 apiece for the hardware alone, before any required infrastructure or other connected costs.