Democrats and Republicans have a common enemy: pornographic ‘deepfakes’

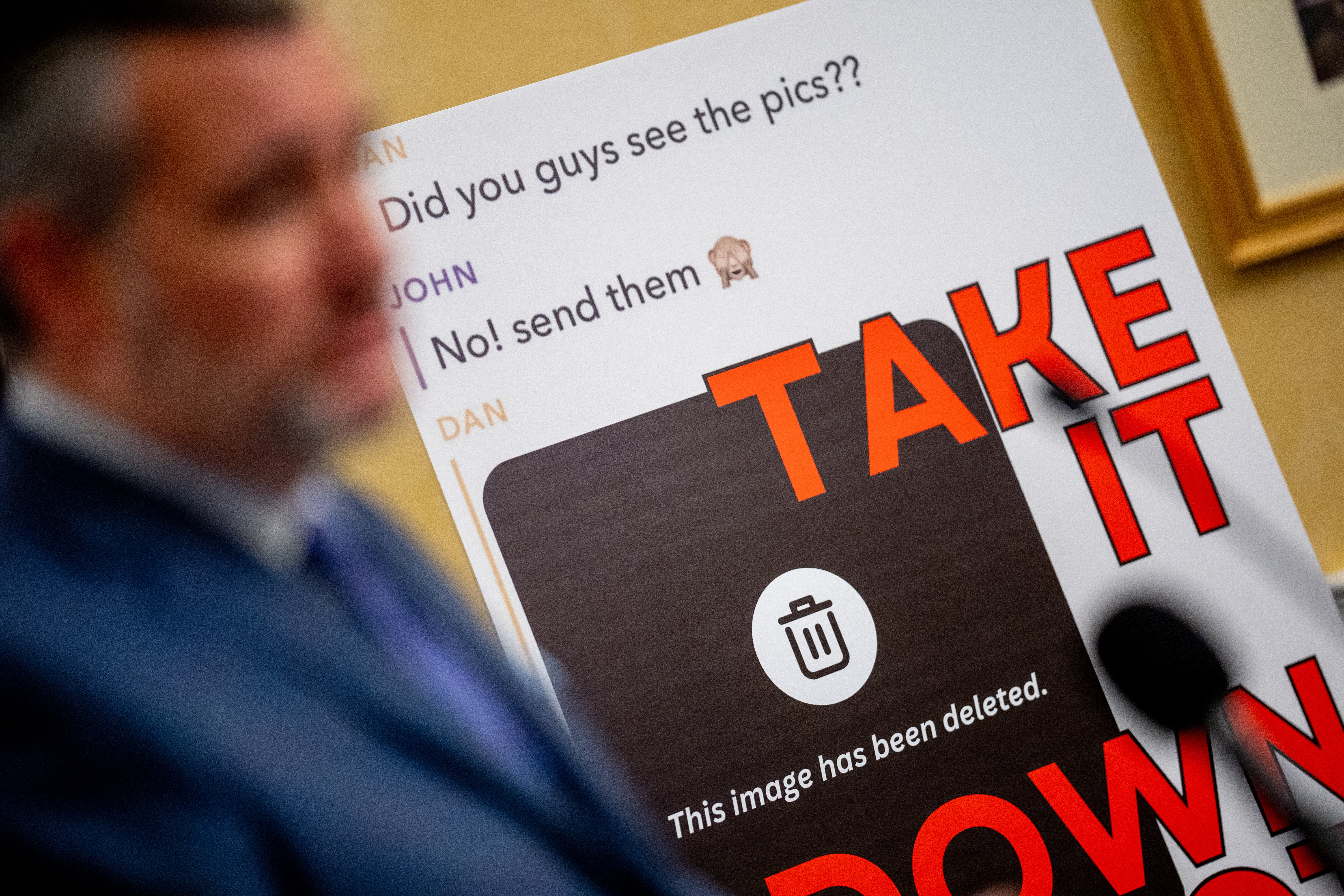

Ted Cruz and AOC are unlikely allies in forcing Big Tech to take down nonconsensual images, but Elon Musk's antics derailed their efforts, Alex Woodward reports.

Last fall, Elliston Berry woke up to a barrage of text messages. A classmate had taken photographs from her Instagram, manipulated them into fake nude images, and shared them with other teens at her school. They stayed online for nine months. She was 14 years old.

![[Congresswoman Alexandria Ocasio-Cortez sponsored legislation that gives victims of deepfakes an avenue to file federal lawsuits]](https://static.independent.co.uk/2024/12/18/23/1c8ce769753447bbabf98e941a9f76f2.jpg)

"I locked myself in my room, my academics suffered, and I was scared," she said during a recent briefing. She is not alone. So-called nonconsensual intimate images (NCII) are often distributed as pornographic "deepfakes", made with artificial intelligence tools that manipulate images of existing adult performers to look like the victims. They target celebrities, lawmakers, middle and high school students, and millions of others.

![[Democratic Senator Amy Klobuchar co-sponsored legislation to make publishing nonconsensual deepfakes a federal crime]](https://static.independent.co.uk/2024/12/18/23/a201ecf73f39444088d8d48de06fb6ba.jpg)

There is no federal law that makes it a crime to generate or distribute such images. Elliston, along with dozens of other victims, survivors and their families, is now urging Congress to pass a bill that would make publishing NCII a federal crime, whether the images are real or created with artificial intelligence, with violators facing up to two years in prison.

![[Deepfake attacks targeting Taylor Swift brought renewed focus to national legislation to force social media platforms and websites to remove nonconsensual pornographic images]](https://static.independent.co.uk/2024/12/18/23/2188665056.jpg)

The legislation was attached to a broader bipartisan government funding bill with support from both Republican and Democratic members of Congress, including a final push from Ted Cruz and Amy Klobuchar, two senators who are rarely, if ever, on the same side of an issue.