Meta’s content moderation changes ‘hugely concerning’, says Molly Rose Foundation

Charity set up after 14-year-old’s death concerned as Zuckerberg realigns company with Trump administration.

Mark Zuckerberg’s move to change Meta’s content moderation policies risks pushing social media platforms back to the days before the teenager Molly Russell took her own life after viewing thousands of Instagram posts about suicide and self-harm, campaigners have claimed.

The Molly Rose Foundation, set up after the 14-year-old’s death in November 2017, is now calling on the UK regulator, Ofcom, to “urgently strengthen” its approach to the platforms. Earlier this month, Meta announced changes to the way it vets content on platforms used by billions of people as Zuckerberg realigned the company with the Trump administration.

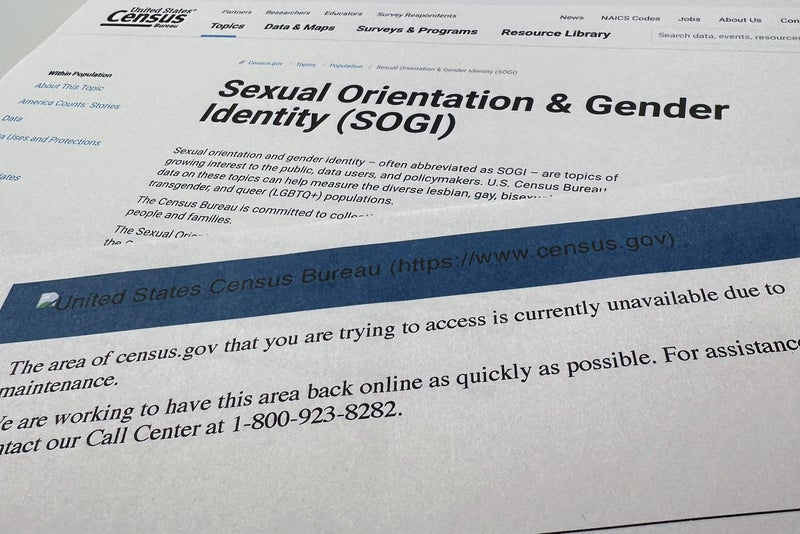

In the US, factcheckers are being replaced by a system of “community notes” whereby users will determine whether content is true. Policies on “hateful conduct” have been rewritten, with injunctions against calling non-binary people “it” removed and allegations of mental illness or abnormality based on gender or sexual orientation now allowed.

Meta insists content about suicide, self-injury and eating disorders will still be considered “high-severity violations” and it “will continue to use [its] automated systems to scan for that high-severity content”. But the Molly Rose Foundation is concerned about the impact of content that references extreme depression and normalises suicide and self-harm behaviours, which, when served up in large volumes, can have a devastating effect on children.