Flawed data can have devastating consequences.

In 1847, Hungarian physician Ignaz Semmelweis made a revolutionary yet simple observation: when doctors washed their hands between patients, mortality rates plummeted. Despite the clear evidence, his peers ridiculed his insistence on hand hygiene. It took decades for the medical community to accept what now seems obvious: that unexamined contaminants could have devastating consequences.

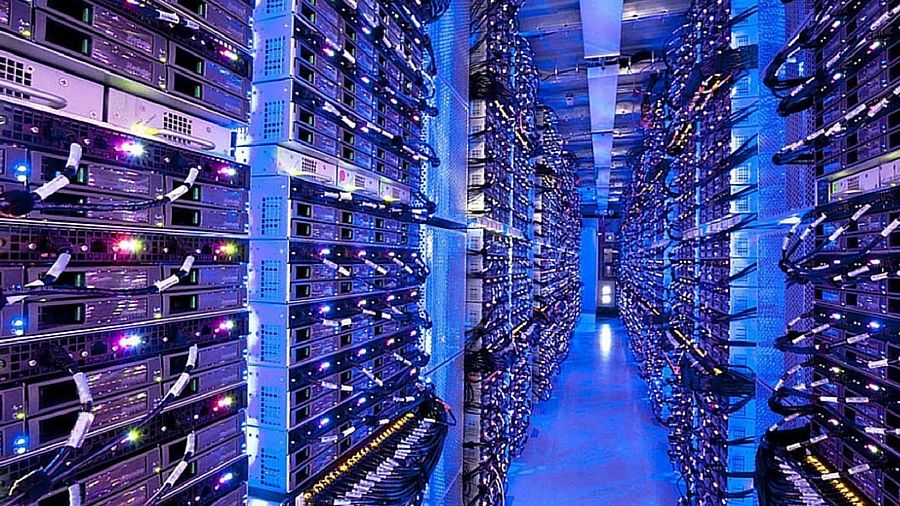

Today, we face a similar paradigm shift in artificial intelligence. Generative AI is transforming business operations, creating enormous potential for personalized service and productivity. However, as organizations embrace these systems, they face a critical truth: generative AI is only as good as the data it's built on, though in a more nuanced way than one might expect. Like compost nurturing an apple tree, or a library of autobiographies informing a historian, even "messy" data can yield valuable results when properly processed and combined with the right foundation models. The key lies not in obsessing over perfectly pristine inputs, but in understanding how to cultivate and transform our data responsibly.

Just as invisible pathogens could compromise patient health in Semmelweis's era, hidden data quality issues can corrupt AI outputs. These integrity breaches lead to outcomes that erode user trust and increase exposure to costly regulatory risks. Bruce Schneier, security technologist and Inrupt's Chief of Security Architecture, has argued that accountability must be embedded into AI systems from the ground up. Without secure foundations and a clear chain of accountability, AI risks amplifying existing vulnerabilities and eroding public trust in technology. These insights echo the need for strong data hygiene practices as the backbone of trustworthy AI systems.

High-quality AI relies on thoughtful data curation, yet data hygiene is often misunderstood. It's not about achieving pristine, sanitized datasets—rather, like a well-maintained compost heap that transforms organic matter into rich soil, proper data hygiene is about creating the right conditions for AI to flourish. When data isn't properly processed and validated, it becomes an Achilles' heel, introducing biases and inaccuracies that compromise every decision an AI model makes. Schneier's focus on "security by design" underscores the importance of treating data hygiene as a foundational element of AI development—not just a compliance checkbox.
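To make "properly processed and validated" a little more concrete, here is a minimal Python sketch of a pre-training hygiene pass. The record fields (`user_id`, `text`, `source`) and the names `clean_records` and `HygieneReport` are hypothetical, chosen only for illustration; a real pipeline would add schema, bias, and provenance checks. In keeping with the compost analogy, the sketch normalizes messy records rather than demanding pristine ones, and it counts every rejection so the cleaning step itself stays accountable.

```python
from dataclasses import dataclass, field

# Hypothetical schema: every record must carry these keys.
REQUIRED_FIELDS = ("user_id", "text", "source")


@dataclass
class HygieneReport:
    """Records that passed, plus a count for each rejection reason."""
    kept: list = field(default_factory=list)
    missing_fields: int = 0
    empty_text: int = 0
    duplicates: int = 0


def clean_records(records):
    """Reject incomplete, empty, or duplicate records and count each rejection."""
    report = HygieneReport()
    seen = set()
    for rec in records:
        # Reject records where any required field is absent or None.
        if any(rec.get(name) is None for name in REQUIRED_FIELDS):
            report.missing_fields += 1
            continue
        text = rec["text"].strip()
        if not text:
            report.empty_text += 1
            continue
        # Collapse whitespace and case so trivial variants count as duplicates.
        key = (rec["source"], " ".join(text.lower().split()))
        if key in seen:
            report.duplicates += 1
            continue
        seen.add(key)
        # Keep the record with surrounding whitespace trimmed.
        report.kept.append({**rec, "text": text})
    return report


records = [
    {"user_id": 1, "text": "Order arrived late", "source": "support"},
    {"user_id": 2, "text": "order  arrived LATE ", "source": "support"},
    {"user_id": 3, "text": "   ", "source": "support"},
    {"user_id": 4, "text": None, "source": "survey"},
]
report = clean_records(records)
print(len(report.kept), report.missing_fields, report.empty_text, report.duplicates)
# 1 1 1 1
```

The report matters as much as the cleaned output: knowing how many records were dropped, and why, is what lets a team spot a skewed source before it skews the model.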

While organizations bear much of the responsibility for maintaining clean and reliable data, empowering users to take control of their own data introduces an equally critical layer of accuracy and trust. When users store, manage, and validate their data through personal "wallets"—secure, digital spaces governed by the W3C's Solid standards—data quality improves at its source. This dual focus on organizational and individual accountability ensures that both enterprises and users contribute to cleaner, more transparent datasets. Schneier's call for systems that prioritize user agency resonates strongly with this approach, aligning user empowerment with the broader goals of data hygiene in AI.

With European regulations like the Digital Services Act (DSA) and Digital Markets Act (DMA), expectations for AI data management have heightened. These regulations emphasize transparency, accountability, and user rights, aiming to prevent data misuse and improve oversight. To comply, companies must adopt data hygiene strategies that go beyond basic checklists. As Schneier pointed out, transparency without robust security measures is insufficient. Organizations need solutions that incorporate encryption, access controls, and explicit consent management to ensure data remains secure, transparent, and traceable. By addressing these regulatory requirements proactively, businesses can not only avoid compliance issues but also position themselves as trusted custodians of user data.
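One way to picture explicit consent management that is also traceable is a registry that denies access by default and logs every decision. The sketch below is a hypothetical, in-memory Python illustration: the class names and the `model_training` purpose label are assumptions, it does not reflect how Solid or any specific product implements access control (Solid pods rely on their own access-control specifications), and it omits encryption, which would require a vetted cryptography library.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass(frozen=True)
class ConsentGrant:
    """A user's explicit yes/no decision for one purpose (hypothetical model)."""
    user_id: str
    purpose: str
    granted: bool


@dataclass
class ConsentRegistry:
    grants: dict = field(default_factory=dict)
    audit_log: list = field(default_factory=list)

    def record(self, grant: ConsentGrant) -> None:
        # The latest decision for a (user, purpose) pair replaces earlier ones,
        # so a revocation takes effect immediately.
        self.grants[(grant.user_id, grant.purpose)] = grant.granted
        self._log("consent_recorded", grant.user_id, grant.purpose, grant.granted)

    def can_use(self, user_id: str, purpose: str) -> bool:
        # Deny by default: without an explicit grant, the data is not used.
        allowed = self.grants.get((user_id, purpose), False)
        self._log("access_checked", user_id, purpose, allowed)
        return allowed

    def _log(self, event: str, user_id: str, purpose: str, outcome: bool) -> None:
        # The registry only ever appends to this trail of changes and checks.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "event": event,
            "user_id": user_id,
            "purpose": purpose,
            "outcome": outcome,
        })


registry = ConsentRegistry()
registry.record(ConsentGrant("alice", "model_training", True))
registry.record(ConsentGrant("bob", "model_training", False))
training_users = [u for u in ("alice", "bob", "carol")
                  if registry.can_use(u, "model_training")]
print(training_users)           # ['alice']
print(len(registry.audit_log))  # 5
```

Denying by default means a missing or revoked grant excludes a user's data automatically, and the audit log gives both users and regulators a record of what was checked, for which purpose, and when.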

Generative AI has tremendous potential, but only when its data foundation is built on trust, integrity, and responsibility. Just as Semmelweis's hand-washing protocols eventually became medical doctrine, proper data hygiene must become standard practice in AI development. Schneier's insights remind us that proactive accountability—where security and transparency are integrated into the system itself—is critical for AI systems to thrive.

By adopting tools like Solid, organizations can establish a practical, user-centric approach to managing data responsibly. Now is the time for companies to implement data practices that are not only effective but also ethically grounded, setting a course for AI that respects individuals and upholds the highest standards of integrity. The future of generative AI lies in its ability to enhance trust, accountability, and innovation simultaneously. As Bruce Schneier and others have emphasized, embedding security and transparency into the very fabric of AI systems is no longer optional; it's imperative. Businesses that prioritize robust data hygiene practices, empower users with control over their data, and embrace regulations like the DSA and DMA are not only mitigating risks but also leading the charge toward a more ethical AI landscape.