Describing security and transparency as “mutually reinforcing pillars essential to building public confidence in AI”, Mr Dudfield added: “If the Government pivots away from the issues of what data is used to train AI models, it risks outsourcing those critical decisions to the most powerful internet platforms rather than exploring them in the democratic light of day.”

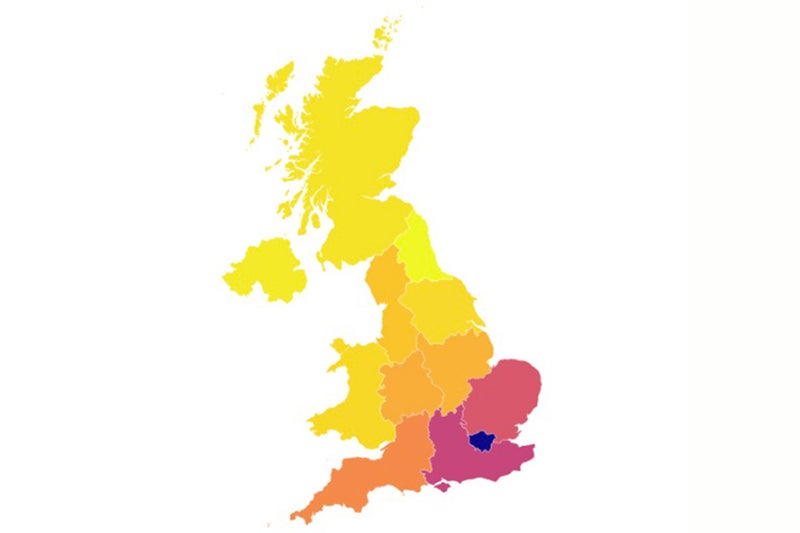

Pointing to a series of scandals involving bias in AI in Australia, the Netherlands and the UK, Mr Birtwistle said there was a “real risk that inaction on risks like bias will lead to public opinion turning against AI”.

When the institute was established in 2023, then-prime minister Rishi Sunak said it would “advance the world’s knowledge of AI safety”, including exploring “all the risks from social harms like bias and misinformation, through to the most extreme risks of all”.

Crime and security concerns already form part of the institute’s remit, but it currently also covers wider societal impacts of artificial intelligence, the risk of AI becoming autonomous and the effectiveness of safety measures for AI systems.

Alongside the AISI’s new name, Mr Kyle announced the creation of a new “criminal misuse” team within the institute to tackle risks such as AI being used to create chemical weapons, carry out cyber attacks and enable crimes such as fraud and child sexual abuse.