How Visual Intelligence gets more powerful on iPhone 16 in iOS 18.3

Visual Intelligence may be the most powerful Apple Intelligence feature. Here's what it is, how it works, and several real-world examples of it in use. Apple added Visual Intelligence with iOS 18.2, and you'll need an iPhone 16 or iPhone 16 Pro to use it. Sorry, iPhone 15 Pro users. It falls under the Apple Intelligence umbrella, and it's one of the few Apple Intelligence features exclusive to the iPhone 16 family.

This AI feature uses the camera on your iPhone to scan your surroundings and provide information about them. If that feels a little abstract, don't worry: we're about to walk through a bunch of demos, in and out of our studio, that show how it can help you. To activate Visual Intelligence, press and hold the Camera Control on the lower-right side of your iPhone. A short press, by contrast, opens the Camera app.

Your phone will vibrate as the camera opens with a sleek and colorful animation. You'll see a new interface that shows a live feed of the camera with an "Ask" button, a "Search" button, and a capture button in the center. Let's start with the most basic of uses. Open Visual Intelligence, point it at something, and snap. Then you can get information from ChatGPT about what you're looking at, or you can do a Google image search to learn more about it.

For example, we took photos of the different cables we have in the studio. Each time, Visual Intelligence identified the cable and provided some basic information about it. If you happen across a cable you don't know much about, you can ask a follow-up question for more information, like this detailed breakdown of what a DisplayPort cable can do. We also have this old Game Boy Color lying around. After a quick scan with Visual Intelligence, we could ask what year it was released.

Those examples both get you information via ChatGPT. Alternatively, you can use an image search. When we scanned a PlayStation 5 DualSense controller, we could see this specific colorway in the results. We could then jump right into the Walmart app to buy it.

Beyond asking questions or searching photos, Visual Intelligence can also help with text and numbers. When you point it at a block of text, new context-aware buttons appear. You can either generate an AI summary or have your phone read the text aloud.

If you happen to be traveling and it detects text in another language, a translate button will appear. When you tap it, it snaps a photo and replaces the text on the image with a translation in your default language. We tested it by translating a Spanish restaurant menu. It's great because there's no need to use any other apps, and it worked automatically. The last text-and-numbers trick is solving equations, something that would have been great when we were still in school.

You don't even need to type the equation in. Just scan it with Visual Intelligence and ask it to solve it for you. In our example equation, it even broke down all the different steps for us. Visual Intelligence also helped with gathering macros from our food, though it wasn't entirely specific. Like the other demos, we just pointed it at our plate, and it identified the food and gave us rough ranges for what we were eating.

We had a small handful of popcorn and asked Visual Intelligence how many calories it contained. It told us a handful like that falls within a range of calories, depending on the type of popcorn and how it was cooked. If you combine that range with what you know about the popcorn (SmartPop, air popped, movie theater style, et cetera), you can get a decent idea of the actual value. Similarly, we took a photo of a glazed donut and asked how many carbs it contained. Once more, it gave us a range of possible carbs.

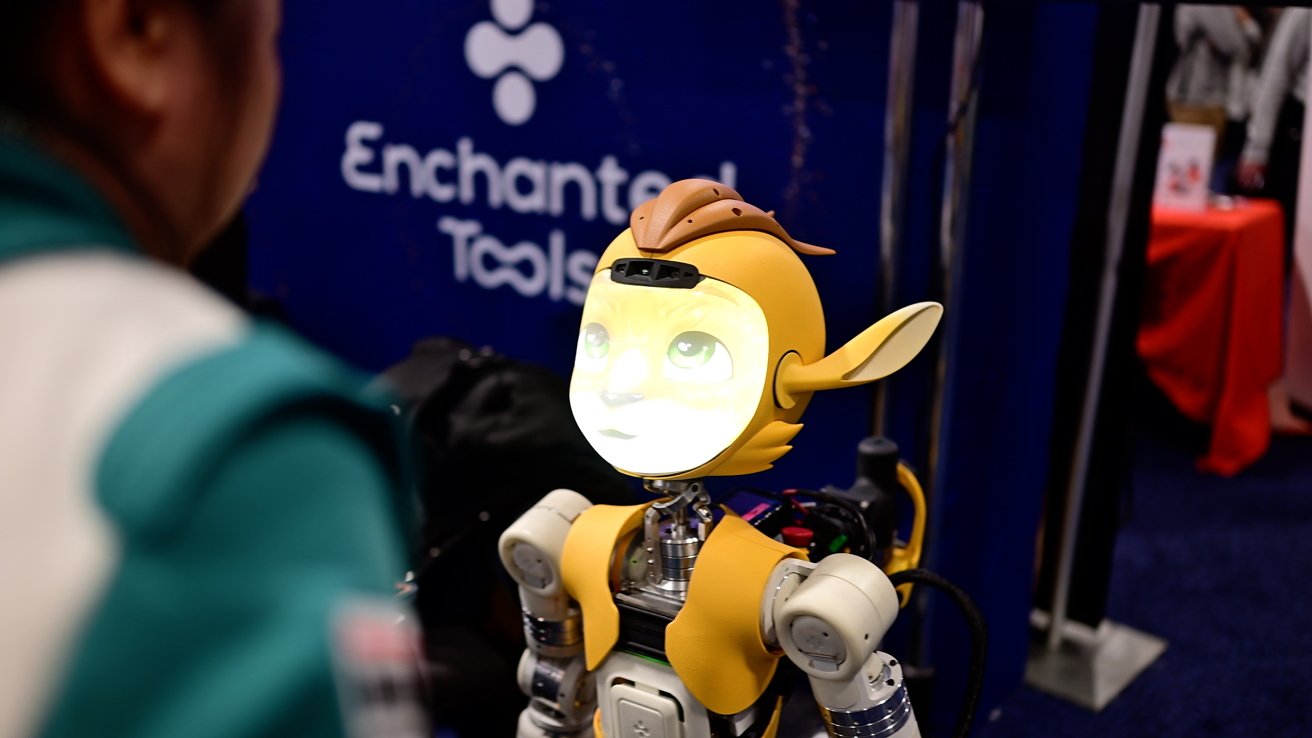

Features like these could be critical to certain users, such as newly diagnosed type 1 diabetics, who are often children and may not be great yet at carb counting. Hopefully the estimates become more accurate as the AI models improve.

With iOS 18.3, Apple added some new features to Visual Intelligence. It can now identify plants and animals automatically. When we pointed it at a plant in our kitchen, a bubble appeared at the top with the plant's name as soon as it was recognized. If you tap the bubble, it shows you additional information from Wikipedia.

It works with animals too, though if you're scanning a dog, it needs to be fairly close to a purebred. No AI will be able to accurately deduce a dog's pedigree from a photo alone. Our pup, Brooklyn, was labeled a Flat-Coated Retriever. While she may have some retriever in her, she's not a purebred Flat-Coated Retriever by any means. Other, more generic animals would likely be better suited to this, or at least to general identification.
